Content created: 2005-07-21


The Neolithic (Essay 1)

The “Agricultural Revolution”

D. K. Jordan

Outline

- What Came First: Foragers
- The “Agricultural Revolution”
- Horticulture and Plow Agriculture
- Taming the Wild Grain
- The Possibly Critical Importance of Beer
- Unnatural Selection: The Biology of Agriculture
- Intermediate Adaptations: Hunting Gardeners
- Intermediate Adaptations: Terminal Foragers
- The Spread of Farming
- Review Quizzes

1. What Came First: Foragers

Foraging. For ninety-nine percent of the period that humans have been living on this planet, they have been foragers. In technical language, “foraging” is the cover term for hunting (land animals), fishing (in rivers and lakes or in the ocean), and gathering (plant materials, honey, some insects, and shellfish). [Note 1]

Foraging still exists. We eat seafood and wild honey and berries picked along the roadside. People shoot deer, cast for bass, and gather wild asparagus. But such products play a trivial role in the modern diet. Very few societies, if any, survive today by foraging alone.

The reason is simple enough: foraging does not usually sustain a large, dense, non-migratory population, and therefore foraging peoples were easily attacked and defeated by larger groups sustained by agriculture or pastoralism. The pressures of competition from such peoples drove the much smaller foraging societies into more and more marginal environments (sometimes called “refuge areas”), or simply assimilated them into broader social systems. This essay is about the rise of agriculture and pastoralism. But first, it is useful to consider some of the properties of foraging life ways to which food-raising stood in such stark contrast.

It is important to remember that "hunting" and "gathering" do not always refer to the collection of things to eat. In addition to their importance as fuel, inedible materials like palm fiber (above) or tree bark (below) have always been critical to creating tools, shelters, and clothing, just as have the bones and hides of animals.

Photo by DKJ

Some few foraging societies did survive, more or less intact, into the early and middle years of the XXth century, and we therefore do have ethnographic accounts and ethnographically based reconstructions of what foraging life was like in a range of environments. Perhaps the most famous accounts have been of the Eskimo (including the Inuit, Iglulik, Yuit, and other groups) in the Arctic, of the Khoisan speakers (especially the San) of the southwest African desert regions now part of Botswana, Namibia, and adjacent lands, and of the numerous Pygmy groups (such as the BaMbuti, the BaBinga, and the Aca) inhabiting the rainforests of the Congo River drainage. Until modern times none of these environments (arctic, desert, jungle) was attractive enough to agricultural peoples for them to settle there.

The ethnographic reporting therefore describes foragers living in marginal environments, and thus under environmental stress, but it is all the ethnography we are going to get. Despite that bias, it appears that a number of important generalizations can be made.

Band Societies. Foraging communities were limited to the number of people who could be supported by the animal and plant foods naturally occurring around their home. Occasionally a foraging group lived amid truly rich, year-round resources, able to support a relatively large population. This was true of prehistoric northern European reindeer hunters and of the tribes along the northwest coast of North America. But far more often the population that could be supported was very small (no more than one or a few families), and constant movement was necessary as the resources of the immediate area varied seasonally or became exhausted. It is tempting to suspect that in earlier centuries and millennia the average foraging group might have been larger, since foragers would have inhabited many environments from which they were driven in recent centuries. However, archaeology does not support that suspicion. Despite occasional Upper Paleolithic sites that appear to have been home to as many as 300 people at a time in some seasons, the usual group size, the one we should assume as a kind of default, was a mere 25 souls, more or less. Such a group is referred to as a “band.”

Division of Labor by Gender. In the majority of foraging societies that we know about, men did most or all of the hunting and fishing —this seems inevitably to have been the case if the prey animals were large, like bison or whales— while women did some or most of the plant gathering, often assisted by children or, at times, men. [Note 2]

Because stone was more often used for hunting implements, hunting generally leaves more archaeologically visible residue than gathering does. We therefore tend to know more about the evolution of hunting techniques than about the plants that people gathered and consumed, and many writers seem to assume that men played a more important role in foraging societies than women did. (Some studies of accidental clay impressions of Upper Paleolithic nets, summarized by Pringle [1998] [Source], remind us that not all hunting is done with stone tools, and in modern ethnographic contexts women are often associated with net making and net use, both “hunting” activities.)

A defined division of labor need not make one sex superior to the other, and most students of foraging societies have commented upon the sense of gender equality and gender complementarity they exhibited.

Leadership. Ethnographers usually noted that in societies so small in scale it was unnecessary (and nearly impossible) to maintain a system of formal political offices. Everyone was related to everyone else and most had lived together for a long time, so the most important social statuses were kinship relations, and everyone’s skills, strengths, and weaknesses were known to everyone else. The term situational authority is sometimes applied, since, if there was a need for “leadership” in any particular activity, be it hunting, singing, or delivering children, it usually fell to whoever was considered best at it. (A society without formal leadership offices is referred to as acephalous, literally “headless.”)


Above: Shoes Made From Wild Yucca Fibres

Below: Skirt Made from Strips of Willow Bark

Obviously humans require more than just food, and both clothing and shelter were important uses of non-food foraged materials. The XIXth- and early XXth-century clothing items here were made of plant fibers gathered by the Kumeyaay people of San Diego County, California.

Riverside Museum

This did not mean that some people (great hunters, for example) did not at times become boastful or competitive. But in most foraging societies such attitudes were frowned upon. Ethnographers described a good deal of gossip and bickering, usually interpreted as having the effect of keeping the pride and boastfulness of a would-be “leader” from disrupting the egalitarianism of daily life. (A possible exception might be the shaman or trance-healer, whose ability to go into trance was usually described as limited to only a few people.)

Gossiping and bickering were usually also important modes of dispute suppression, although in extreme cases members might leave to found a new group or to join a different group. Conventions encouraging marriage between bands or prohibiting it within bands led to very widespread kinship networks in most of the foraging societies ethnographers were able to study, so the possibility nearly always existed of using these kinship connections to change bands, as a result of disputes or merely as an adjustment to excessive band size.

Logically enough, in foraging societies, or anyway in nomadic ones, possessions were few and food was perishable, so a strong ethic of sharing and mutual support was pervasive. Indeed, one of the most difficult adjustments that foragers have had to make when they have assimilated into agricultural societies and modern states has been the violence done to their sense of the moral necessity of sharing, as we shall see. And one of the complications reported by ethnographers studying foraging peoples was their hosts’ expectation that the ethnographers’ own equipment (paper, pencils, cameras, clothing, medicines, and so on) ought to be public property for immediate consumption.

Although foragers were just as thoughtful and just as intelligent as other populations, none developed (or particularly needed) systems of writing, so our knowledge of their intellectual life is limited to what ethnographers collected. There is tremendous variation in the record, but most ethnographers have described bodies of myth, sometimes linked to systems of taboo used to explain illness or deviance, or to genealogies used to explain the human relations of the band. Most foraging bands had ideas about spirits of various kinds that could influence human life, and there were nearly always traditional ways of communicating with them, seeking their favor or assistance, or defending against them. Not surprisingly, foraging peoples always possessed startlingly complete knowledge of the plants and animals on which they depended, and of the environment in which they lived.


2. The “Agricultural Revolution”

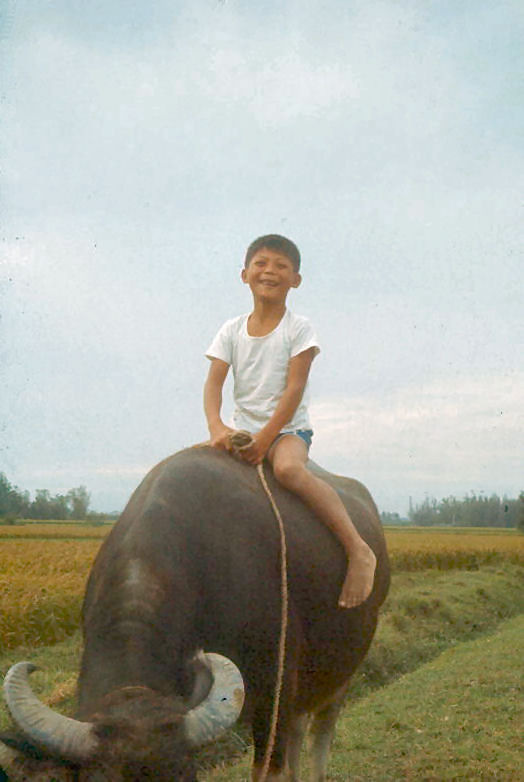

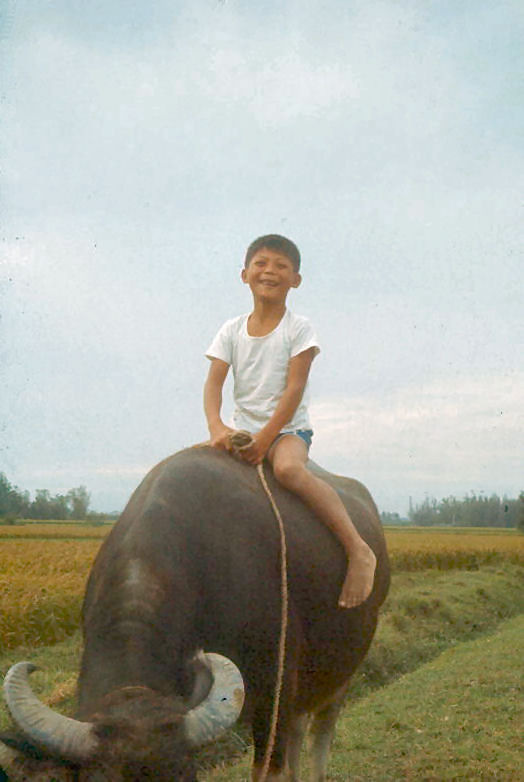

Small Boy Tending a Water Buffalo Beside a Rice Field

(Taiwan, 1960s) The domestication of animals involved a suite of genetic changes, many of which are visible in their physical remains. Among the changes not normally visible are behavioral ones, which made the animals more docile and more willing to accept human governance.

Photo by DKJ

The Neolithic. Few inventions could have made more difference to the human career than one that would allow human communities both to increase in size and to remain in one place year-round. Larger population size would have meant new and more specialized social statuses and vastly more complex kinds of relations between people. Not having to move would have meant the possibility of permanent buildings (especially food storage facilities) and the construction of things too heavy to move, such as pottery, furniture, substantial shelters, or such industrial equipment as looms, water works, or millstones.

Both larger population size per community and the ability of communities to remain in one place resulted from the successful domestication of plants (agriculture), and, to a lesser extent, of animals (herding). The term “Neolithic” (“New Stone”) is often applied to this period or stage in human history.

The term “Neolithic” strictly refers to a “new” way of making stone tools (not necessarily only agricultural tools), namely by grinding the surface of the stone rather than (or in addition to) chipping it. In the earlier, Paleolithic (“Old Stone”) age, stone tools were simply stones, sharpened by breaking them. With some kinds of stone, skillful chipping allowed the production of extremely sharp edges, and even long blades. But it was effective only with the most glass-like stones (flint, chert, and obsidian in particular), which fracture so predictably that it was possible to knock or press off small flakes to leave a sharp edge. (Obsidian is a volcanic glass; flint and chert are fine-grained forms of silica found in sedimentary rock.) Yet some tasks, such as cutting down trees for timber or to clear fields, do not require a sharp edge so much as heavier, sturdier tools. The same rocks that have the sharpest edges when chipped are too fragile for many heavy tasks.

…slate, granite, schist, and limestone … are difficult or even impossible to flake. Toolmakers using these materials had to start by laboriously pecking the rock into the desired shape, then finish the tool by grinding and polishing. Most of these early ground stone tools were made for woodworking — items like axes, chisels, and adzes.

(Christopher J. Ellis 2013 "Paleoindian and Archaic Hunter-Gatherers" IN Marit K. Munson & Susan M. Jamieson (eds) Before Ontario: The Archaeology of a Province. Montreal: McGill-Queen's University Press. P. 41.)

Ground stone tools were a whole different style of tool from the more delicate points and blades, and in most parts of the world they are considered the mark of the “Neolithic,” or “new stone,” age.

Not surprisingly, the term “Neolithic” is therefore normally associated over virtually the entire world with (1) the development of food production, largely or entirely superseding food gathering, (2) fixed residence (with more substantial architecture), (3) pottery production, and (4) increasing population density (with political organization to manage it). The term “Neolithic” is not normally applied to societies with (1) writing, (2) techniques of metal working, and/or (3) large-scale political organization.


The Neolithic brought a need for new tool types, such as axes suited to clearing brush and trees. Polishing rather than chipping also allowed a wider range of stone to be made into specialized tools. This museum diorama shows a Neolithic ax-head being polished on the south coast of China.

Hong Kong Museum of History

Obviously Neolithic communities did not grow up at the same time in all parts of the world. Nevertheless, some authors speak of a Neolithic “period” or (better) “phase,” even though dates vary from location to location. [Note 3]

Because of the dramatic effects that domestication had on the possible human ways of life, the first appearance of agriculture in the archaeological record is often called the “agricultural revolution,” “Neolithic revolution,” or “food producing revolution.”

It is clearly not correct to think of agriculture as being “invented” by some Upper Paleolithic genius who suddenly one day decided to plant things. Indeed, evidence suggests that the transition from a food-gathering way of life to a food-producing one was a very gradual process, one that unfolded more or less independently in several different parts of the world. Agriculture seems, from what we now know, to have developed independently in as many as ten locations, including the Near East [Note 4], Mexico, northern China, and Peru. Quite probably there was some amount of deliberate planting and harvesting in virtually all areas of human habitation even before the influence of these focal regions arrived and supplemented it.


A. Horticulture and Plow Agriculture

Some specialists define agriculture very narrowly as planting, raising, and harvesting crops by processes that make use of a plow. They distinguish this from horticulture (or “gardening”), defined as planting, raising, or harvesting crops without making use of a plow. Typically, horticulturalists use a “dibble” (or “digging stick”) to make a hole in the ground, into which a seed or cutting is placed. They also use a hoe, sometimes made of an animal shoulder blade, a flat rock, or a flat piece of strong wood (or later metal). It is used both to move dirt and to chop weeds out of the way.

Hoes were made from wood, stone, or, as here, the shoulder blades of large animals.

Knife River Indian Villages National Historic Site, North Dakota

Plow agriculturalists use the plow to turn the soil over in furrows; they then scatter (“broadcast”) the seed over the newly plowed field and smooth the soil to cover the new seed. By turning over the soil, a plow redistributes fertile and depleted soil, often bringing richer soil from greater depths to the top, as well as tearing up and burying weeds that might otherwise compete with the new seedlings for nutrients. Ideally, the weeds rot under the surface of the soil, fertilizing it.

The distinction between horticulture and plow agriculture is useful because it captures a difference in scale. For many cultigens, plow agriculture makes larger crops possible on the same land. It also uses the labor of draft animals (as well as their fertilizing dung) in the process of plant production, increasing the crop yield per unit of human labor. For this reason, in suitable soils it is more efficient for all but small or specialized gardens and tends to supplant horticulture when the two are known to the same peoples. For present purposes, the distinction between plow agriculture and horticulture is largely irrelevant: the significant issue is human ability to produce food. (By using the expression “plow agriculture,” we can preserve “agriculture” as a cover term.)

Chinese Palm-Fiber Rainwear

In China, raincoats and rain hats were traditionally made of palm fiber or rice straw. (The capes are known as suōyī 蓑衣 in Chinese and the combination of cape and hat as suōlì 蓑笠.)

Although it is convenient to see non-food uses of rice straw as essentially a by-product of rice grown for food, in the case of palm, the use of fibers is often primary and food use often secondary, especially in varieties of palm that do not produce edible dates.

San Diego Chinese Historical Museum

Non-Food Agriculture. Some crops raised for purposes other than food also count as agriculture. Examples are bamboo for use in construction; hemp, flax, or cotton for use in clothing; gourds for use as containers; or mulberry leaves used to feed silkworms. Many crops that we think of as primarily food crops in fact have secondary uses that can be just as important. For example, rice is a critical food crop across Asia, but rice straw is also a critical cooking fuel. “Grass farming,” the deliberate cultivation of grass in summer to be harvested and used to feed animals in winter, is another example of non-food agriculture, although a less clear one, since the animals are often a source of food. Cotton is raised for its fibers, but its oil-rich seeds can be eaten or pressed for edible (and flammable) oil (Dahl 2006:39). [Source] Apparently no society raises non-food crops without also raising food, but nearly all societies that raise food crops also raise some non-food crops.

First Farming. Specialists are not certain what brought about plant and animal domestication. Among modern peoples, even nomadic foragers engage in some deliberate manipulation of plants. Some researchers have argued persuasively that this shows they understand perfectly well how to plant seeds or cuttings to produce additional food, but that this knowledge is of only limited use to nomads. It follows that this should be our default assumption about the late pre-Neolithic peoples as well: they knew a planted seed would grow, but didn’t see much point in bothering with it.

However it came about, significant domestication began to be “invented” over most of the planet within a few thousand years, beginning about 8000 BC, so it seems reasonable to assume that the cause of the change was something that happened planet-wide.

One of the best candidates is climate change. The ice age had just ended, and with the warmer climates came changes in plant life, some of them pre-adaptive to human exploitation for food. At the same time, the warming climates may have been responsible for some population increase in the human communities in peripheral areas, and for the spread of humans into new areas. Increased pressure on traditional foraging resources could have led to various “temporary” or “minor” expedients to increase the food supply, possibly including experimentation with deliberately nurturing edible plants and animals.

The Near Eastern development seems to have been the earliest, beginning about 8000 BC. A principal domesticate of this area in early times, as today, was wheat, and our story of agriculture begins with this.


B. Taming the Wild Grain

Robert Braidwood was an archaeologist well known for his search for the earliest traces of domesticated wheat in the Near East. Previous investigators, noting settled agricultural life in the river valleys, had assumed that domestication had occurred there, but had found no evidence. Working in the middle years of the XXth century, Braidwood chose to investigate an area in the foothills of the Zagros and Taurus Mountains, which form an enormous arc flanking the famous fertile crescent, a region that ranges through the modern nations of Iraq, Iran, Turkey, Syria, Lebanon, and Israel.

The Fertile Crescent & Some Sites Mentioned in the Text

(After Swartz & Jordan 1976:374 [Source])

The Zagros-Taurus foothills were selected on Braidwood’s hunch that the domestication of wheat and barley and of common domestic animals must have occurred first in an area where they all existed in a wild state. Today, as in ancient times, a wild species of barley grows in the area, as well as a small-grained wheat called “einkorn” and a larger-grained kind called “emmer.” In addition, there were wild sheep, goats, hogs, cattle, and horses, all destined to become domesticated mainstays of the Near Eastern farm and barnyard by earliest historic times.

The Site of Jarmo. Braidwood and an interdisciplinary team of specialists excavated a site called Jarmo in the heart of the hilly flanks of the fertile crescent in northeastern Iraq, about 250 km from Baghdad.


There was nothing very special about Jarmo, except that (1) it was located in the place where the wild ancestors of domesticated forms grew, and (2) it was occupied just before the earliest known domesticated forms were grown. So it seemed to promise a snapshot of life just as domestication was occurring.

Display of Domesticated Cereals in the Cardiff Museum, Wales. Left to right: Emmer wheat, einkorn wheat, club wheat, and two kinds of barley.

Cardiff Museum

In the lowest (hence earliest) layers of the Jarmo deposits, dating between 6750 and 6500 BC, the researchers found goat and sheep bones from animals that seem to have had some of the characteristics of domesticated breeds. Grain from that long ago is, of course, unlikely to be available for examination today. Surprisingly, however, there was also evidence of domesticated wheat and barley, because some kernels had been preserved by accidental heating in or near ancient fires (which converted them to charcoal and prevented them from rotting).

There were also impressions of kernels in some of the clay bricks, since the bricks had been made by mixing straw with mud for greater strength and sometimes grains had remained attached to the straw, leaving their impression in the hard-packed bricks for archaeologists to observe thousands of years later. In other words, Jarmo at this early period seems to have been the site of a settled agricultural village of earlier date than any of the communities archaeologists had discovered by digging in the river valleys. Through the window of unpretentious little Jarmo, the hilly flanks zone was showing its importance.

Further archaeological attention in the “hilly flanks” brought a number of earlier sites to light. The best known of these, and among the oldest found so far, are Zawi Chemi Shanidar and Karim Shahir, both in Iraq. These sites provided sickle blades and millstones (but no grain) dating from nearly 9000 BC, some two thousand years earlier than the lowest layers at Jarmo. Probably by the time of the Jarmo occupations, herding and agriculture were already primary modes of subsistence in the hilly flanks area, involving domesticated goats and sheep and possibly hogs, and including the cultivation of barley, einkorn, and emmer.

In 2013, archaeologists working at Chogha Golan, a thousand kilometers to the east, on the Iranian side of the huge Zagros mountain chain, announced the documentation of a sequence extending from almost no domestication to nearly full domestication between 9700 BC and 7800 BC. The full process seemed to have taken nearly two thousand years, they reasoned. (Bower 2013:13) [Source]

The Zagros Mountains at Oshtoran Kooh, in Iran

(by Wiki66, from Wikimedia Commons)


C. The Possibly Critical Importance of Beer

None of this speaks to ancient people’s motivations, of course. Although it is easy to imagine foragers happily increasing their supply of some foods, many of the plants destined to become the most important foods for agricultural peoples were never significant for foragers. Modern wheat has large, plump, edible grains, easily removed from the chaff, and reminiscent of pine nuts. But that was not true of wheat before a great deal of genetic modification. Wild ancestors of modern cereal grain strains were far more similar to modern crab grass than to modern food grains.

That is not to say pre-agricultural peoples never used wheat and other cereal grains. A site in Israel produced several charred seeds of (presumably wild) emmer wheat dating to about 21,000 BC, for example.

In 2018 archaeologists announced possible bread crumbs at a Natufian site in Jordan. To everyone’s surprise, the provisional date was about 12,400 BC. So it is possible that occasional groups did collect enough wild grain to be cooked, although probably not routinely.

In 2015 at an Upper Paleolithic, Gravettian site in southern Italy, archaeologists were able to extract from a flat, slightly polished stone the residue of wild oats that had probably been ground on it. Presumably the goal was to create a flour that could be mixed with water and cooked into gruel or flatbread, either for immediate consumption or to be stored for future use. The find dates from about 30,000 BC. It is extremely unlikely that wild cereals played a major role in the diet at sites like these, but, like most plants, they were apparently considered edible.

Sheep Horn Beer Mug (Scotland) Photo by DKJ

Why would tiny wild grains be attractive to most foraging peoples? They are a nuisance to harvest and difficult to separate from the chaff. They are hard to eat without a lot of preparation. They do not offer much to eat on any one stalk, let alone any single grain. And finally, if one tries to eat them right off the plant, they don’t taste particularly good. So why would anybody bother with wheat anyway? Bread and gruel, the commonest ways of consuming cereal grains in antiquity, would probably not have occurred naturally. So what could possibly have tipped ancient people off to the fact that cereals like wheat and barley were edible at all, let alone potentially important foods?

Beginning in the 1950s some scholars explored the possibility that the earliest use of wheat and barley may have been in combination with dried fruits as ingredients in beer. Since grain fallen into water can sometimes ferment naturally, a kind of primitive proto-beer could more plausibly have been produced by accident than could proto-bread, or perhaps even proto-gruel. Bread, in this reconstruction, would have had its origins as a step in brewing, rather than the other way around.

One of the most persistent advocates of this position was Solomon Katz of the University of Pennsylvania, basing his view partly on the fact that barley, an even bigger nuisance than wheat as a bread grain but preferable for making beer, seems to have been domesticated slightly earlier than wheat. Unfortunately, our earliest solid evidence of beer drinking in the Near East still comes from several thousand years after the earliest domestication. (Earliest evidence of beer drinking at UCSD dates from the admission of its first graduating class. [Note 5])

Chinese Jugs

These modern Chinese jugs were sold full of liquor, but throughout history similar jugs have also been used for other purposes. Only by studying the chemical remains in old vessels can archaeologists be sure how they were used.

Photo by DKJ

By 2003, scientists in China had discovered residues clinging to the insides of broken bits of ceramic jars showing evidence of a fermented grain beverage, almost certainly rice-based, having been consumed at a site called Jiǎhú 贾湖 in central China’s Henan province about 7,000 BC. Unlike barley and wheat, rice does not provide its own yeast for fermentation (reducing the probability of it happening by accident), but traces of tartaric acid suggested that the fermentation agent had been grapes (McGovern 2003: 314-315). [Source] From the chemical analysis, the Jiǎhú rice beer appeared similar to a fermented wine-and-beer beverage made in Turkey over six thousand years later, about 700 BC. Separate evidence for both rice and grapes was then found at Jiǎhú, which, as we shall see below, now provides some of our earliest evidence so far for the development of agriculture in eastern Asia. (Or were people fermenting a wild product?)

In 2016 another archaeological site, in central China's Shaanxi Province, turned up various items that seemed adapted to beer production: stoves, grain husks, distinctive pottery forms that seemed specialized for various stages of beer making, and residual chemical deposits inside some pottery vessels. In short, it was a kind of brewery. The find dated to about 3000 BC. In view of the Jiǎhú findings, beer in China at that date was hardly surprising, although it had not previously been found. But the raw materials did turn out to be unexpected: the chemical deposits seemed to be made up of material from both wild and domesticated grains (and probably some tubers), and the grains prominently included barley. Barley was originally a Near Eastern grain, and it is also the grain that works best in beer making. The date of about 3000 BC was roughly a thousand years earlier than other evidence for the introduction of barley into China.

The implication that archaeologists have tentatively drawn is that the Chinese already knew about beer, and that barley and the knowledge of how to turn it into beer came into China from the Near East much earlier than the first Chinese use of barley for anything else.

Excursus: Beer is made from fermented grain. Fermenting grain involves two steps, and fruit can offer a shortcut:

(1) Carbohydrates in each seed are converted into sugars through the action of its resident enzymes. This is called “malting” by brewers, and it occurs naturally when the seed germinates. The enzyme action can be stopped if the malted sprout is then dried, leaving the dry sugars. Barley is unusual in having particularly active enzymes, providing optimal malt, even if mixed with other grains. [Source]

(2) Yeasts are a normal part of the air we breathe in most regions, and they also occur in our bodies, on our skin, and in our saliva. Although there are over a thousand species of yeasts, some of the most commonly occurring ones, on reaching malt that has been moistened with water (producing “mash”), consume the sugars and excrete alcohol and carbon dioxide. (The gradually rising concentration of alcohol eventually kills the yeasts.)

(3) Fruits, like everything else, are generally covered with yeasts. Unlike grains, they are already full of sugar, so they ferment easily without malting. In addition to turning into wine, fruit residues appear to have been used as “starters” for some ancient beer. (If you leave blueberries in a bowl of wet breakfast cereal —or merely spit into it— it should, in theory, turn into primitive beer. It will be gross and disgusting, but it will count as beer, at least for archaeological purposes.)
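As a rough illustration of step (2), chemists commonly summarize alcoholic fermentation with the overall equation for a simple sugar, glucose, being converted by yeast into ethanol and carbon dioxide. This is a simplification: the sugars in malt are largely maltose, which yeasts first break down into glucose, and the many intermediate reactions are omitted.

$$
\mathrm{C_6H_{12}O_6} \;\longrightarrow\; 2\,\mathrm{C_2H_5OH} \;+\; 2\,\mathrm{CO_2}
$$

In words: each molecule of glucose yields two molecules of ethanol (the alcohol in beer) and two of carbon dioxide (the bubbles).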

Fermenting Grain in Rural North Carolina Storefront Micro-Brewery

Even if beer was the “discovery” that led to bread and gruel, it was destined to play a much smaller role in human life than other ways of consuming grains. After all, today rice, maize, and wheat provide about a third of all calories consumed by human beings. To provide the carbohydrate basis for settled agricultural life, wheat would have to have been used in non-fermented form and consumed in greater quantity than would be possible with beer, it is reasoned. But beer may very well have been the first step.

Beer also had other roles to play. Perhaps most importantly, because it contained some alcohol, it carried fewer harmful bacteria than most available drinking water. Fermentation more broadly also had a major role in transforming and preserving food. Modern examples range from wine and soy sauce to sauerkraut and kimchee.


D. Unnatural Selection: The Biology of Agriculture

Modern wheat (left and below) has substantially larger individual grains than so-called "broom grasses" that occur as weeds or are occasionally used for animal feed or fiber.

In addition, in domesticated wheat the base of each kernel and of the whole head is "non-fractionating": it clings firmly to the stem until harvest, while the casing or chaff is easily detached. Wheat of this kind can be nibbled as a snack in the field. That was not true of its wild ancestors or of broom grasses.

Photos by DKJ

Fractionating Wheat. Domestication has two aspects: human knowledge and the biology of the plant or animal in question. The domestication of wild wheat is an instructive example of the interplay of forces behind the origins of many domesticated crops all over the world. Wild wheat differs from domesticated wheat in a number of respects. Those differences are how archaeologists can tell them apart, and they are why domesticated wheat can be used effectively as human food. The grains of any type of wheat are arranged in a head, or spike, at the end of a stalk. Each grain is enclosed in a kind of shell, which is joined to the spike at a node. To reseed itself, wild wheat has highly fragile nodes that dry out when the wheat is ripe and drop individual grains from the spike very easily. Such wheat is accordingly called fractionating. Wheat is harvested by carefully clipping the stalks and bringing them home for processing, which would be very hard if the grains tumbled off the stalk at the slightest breeze.

In ancient times people presumably harvested wild wheat anyway, in spite of these handicaps. But the grains they managed to bring home tended to come from the stalks whose grains clung best. There is quite a bit of genetic variation in wheat, and apparently on some aberrant stalks the nodes were tight enough that the kernels did not drop at all. Such a mutant could not have seeded itself efficiently and so would not have spread as a wild strain, but it was exactly the kind of wheat that was easiest for early farmers to harvest.

If the farmers dropped seeds around their settlement on reaching home or in the course of processing wheat, the seeds would have tended to be of wheat that was easiest to harvest, the kind that did not naturally drop its kernels. More and more of the wheat that would have sprung up near human settlements would have been of the “non-fractionating” variety. In a comparatively short time, the natural low proportion of mutant, non-fractionating stalks would have turned into a high proportion of non-fractionating stalks in the now “artificial” fields of domesticated wheat.
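The argument in the last three paragraphs amounts to selection by harvesting, and a toy calculation shows why the shift is predictable. The sketch below is a hypothetical model, not anything from the archaeological literature: it assumes each stalk type breeds true, that farmers resow only what they harvested, and that a fractionating stalk still holds an arbitrary 30% of its grain at harvest while a non-fractionating one holds all of it. The starting share of mutants (1 in 1,000) is likewise invented.

```python
# Toy model of unconscious selection for non-fractionating wheat.
# Every number here is hypothetical and chosen only to illustrate the idea.


def next_share(p, kept_fractionating=0.3, kept_non_fractionating=1.0):
    """Share of non-fractionating seed in next season's sowing.

    p: this season's share of non-fractionating stalks (between 0 and 1).
    kept_*: fraction of each type's grain still on the stalk at harvest.
    Assumes farmers resow only the grain they harvested.
    """
    harvested_non = p * kept_non_fractionating
    harvested_frac = (1 - p) * kept_fractionating
    return harvested_non / (harvested_non + harvested_frac)


def seasons_until(target, p0=0.001, kept_fractionating=0.3):
    """Count harvest-and-resow cycles until the mutant share reaches target."""
    if not 0 < p0 < target < 1:
        raise ValueError("need 0 < p0 < target < 1")
    if not 0 < kept_fractionating < 1:
        raise ValueError("fractionating stalks must lose some grain before harvest")
    p, seasons, history = p0, 0, [p0]
    while p < target:
        p = next_share(p, kept_fractionating)
        seasons += 1
        history.append(p)
    return seasons, history


if __name__ == "__main__":
    seasons, history = seasons_until(0.5)
    for season, share in enumerate(history):
        print(f"season {season}: {share:.1%} non-fractionating")
```

With these made-up values the non-fractionating share passes one half after six harvest-and-resow cycles. Real harvests were far messier (wild seed kept arriving, and some ways of gathering grain would not favor the mutants at all), so the toy numbers say nothing about actual rates; the model only shows that the direction of change follows from how the wheat was gathered.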

Archaeologists have two main sources of evidence for the change from fractionating (wild) to non-fractionating (domesticated) wheat. One is the set of physical differences between domesticated and wild wheat that arose in the course of early harvesting and cultivation. Another is the study of microwear on stone blades used as sickles to cut through the silica-bearing stalks of grasses like wheat. [Note 6]

Neolithic flint sickle blade mounted for cutting, displayed with Einkorn. The presence of microscopic "sickle sheen" on the stone blade can be evidence that it has been used for cutting high-silica grass stems.

(Hunterian Museum, Glasgow)

Other genetic changes came about in wheat as it was carried out of its home region in the hilly flanks of the Fertile Crescent into new environments. For example, as people planted wheat in drier areas, mutants suited to dry climates survived in higher proportions than those that depended on wetter weather, gradually producing strains of wheat adapted to the new environment. The same happened in wetter climates and in colder and hotter zones. In some cases introduced wheat complemented other crops almost perfectly. In the American Southwest, for example, cold-tolerant wheat introduced after the Spanish conquest could be planted in the fall, after the maize (corn) harvest, and harvested in the spring, when maize was just being planted, substantially increasing the available grain. It is this genetic variation, and the enormous adaptability that results from it, that accounts for wheat’s extraordinarily wide distribution over the world today.

The genetic variation in wheat, with its constant production of mutant forms and consequent potential for transformation in new situations, is unusual. Most food plants were less easily transformed from wild varieties to domestic ones, and some show virtually no genetic differences from their wild ancestors at all.

Animals. The domestication of grains brought with it the domestication of cats. Stored grain everywhere attracts rats and mice. Cats have no interest in grain, but they are natural predators of the rodents it draws. Arguably grain domestication would have been impossible without the participation of cats.

Other usual members of Neolithic human communities were dogs, pigs, and goats. Dogs seem to have been domesticated (from their ancestral wolves) several times in human history, each time among pre-Neolithic hunting societies. In Neolithic times dogs would rarely have been used in hunting, but they would have been valued as watchdogs and perhaps, like pigs, for their willingness to eat human dung, cleaning living sites. These animals, along with chickens, geese, and ducks, are early and edible domesticates, widely found in Neolithic sites. (All of them were also potential carriers of disease, but that quickly becomes a different essay.)

Domesticated Sheep Horns

Horns cease to have much defensive use in domesticated animals and their forms often change

(Photo by DKJ)

Changes broadly similar to those in plants occurred in early domesticated animals. For example, domesticating Near Eastern sheep and goats, and thereby protecting them from natural predators, removed the heavy selective pressures that had favored the strong, straight horns needed for self-defense in the wild. In domesticated herds, mutant genes producing aberrant horns were often not eliminated from the population, and horn (and bone) forms gradually changed within a few generations of domestication. This often enables archaeologists to establish whether a given animal was hunted or raised.

The creation of domesticated strains of plants and animals results from the artificial selective pressures (or the removal of natural selective pressures) that follow from human contact. This gives archaeologists a convenient guide, but that is not its only significance: it also shapes our view of how domestication came about. Our example of wheat in particular suggests that the development of agriculture was not just a matter of people learning about plants or “discovering” that things that are planted will grow. They surely already knew that. Instead, domestication involved a long, continuing interaction between, on the one hand, human beings who tended to concentrate wild plant species important to their diet and, on the other, the plant mutations that turned locally prominent populations of these wild species into more and more dependable and productive varieties, suited to play a bigger and bigger role in the human diet.

In 1965, archaeologist Kent Flannery made the point in the following way:

With the wisdom of hindsight we can see that when the first seeds had been planted, the trend from “food collecting” to “food producing” was under way. But from an ecological standpoint the important point is not that man planted wheat but that he (i) moved it to niches to which it was not adapted, (ii) removed certain selective pressures of natural selection, which allowed more deviants from the normal phenotype to survive, and (iii) eventually selected for characters not beneficial under conditions of natural selection.

All that the … process did for the prehistoric collector was to teach him that wild wheat grew from seeds that fell to the ground in July, sprouted on the mountain … in February, and would be available to him in usable form if he arrived for a harvest in May. His access to those mature seeds put him in a good position to bargain with the goat-hunters in the mountain meadow above him. He may have viewed the first planting of seeds merely as the transfer of a useful wild grass from a niche that was hard to reach —like the [rock slope] below a limestone cliff— to an accessible niche, like the disturbed soil around his camp or on a nearby stream terrace. (Flannery 1965: 261.) [Source]

In other words, like other great but gradual transformations of the human condition, the beginnings of agriculture probably did not seem like very much of an innovation to the participants. The agricultural “revolution” was not revolutionary in its suddenness, but in its ultimate importance.


E. Intermediate Adaptations: Hunting Gardeners

In fact, the term “revolution” in the expressions “Neolithic revolution” and “agricultural revolution” has always troubled many archaeologists. However significant the difference between a typical foraging society and a typical Neolithic one, there is a full range of intermediate adaptations, both among modern peoples and in the archaeological record. Some peoples today still live by a mix of foraging and gardening.

The carefully studied Yąnomamö of the Amazon jungle in Venezuela, for example, raise gardens for their principal sustenance, but continue to hunt wild animals, as do most dwellers in the Amazon rain forest. So do the mountain-dwelling peoples of Papua New Guinea. So do many other peoples.

Importantly, Yąnomamö gardens cannot sustain them for many seasons in the same location, so they must move from one village site to another over time. They are not exactly nomadic, but they are not quite settled either. The ethnographer Napoleon Chagnon developed a complex model of the long-term effects of this adaptation as population gradually rose, requiring more “administration” as different groups began to impinge on each other’s room for expansion or movement (Chagnon 1983) [Source].

Even where such tropical gardening peoples have managed far longer continuous residence in one village, they usually have a history of village movement and show relatively few of the characteristics of developed agricultural societies. Their movements also tend to bring them into conflict with similar neighboring groups.

In some cases a group might farm for part of the year but depend upon hunting for the rest. This was the case with the Hidatsa and Mandan peoples of what is now North Dakota at the time they were first described in print. During the summers they grew maize (corn) near their permanent earth lodges.

But when the sub-zero temperatures and howling snowstorms of winter came, they moved into bark structures in river valleys, out of the wind, and depended upon bison (“buffalo”) hunting for sustenance. (Bison became especially important after Europeans brought firearms and reintroduced horses to North America, which made bison hunting increasingly efficient until overhunting depleted the herds.) In spring, melting snow raised the rivers and washed away the winter houses as people returned to their earth lodges to plant maize.

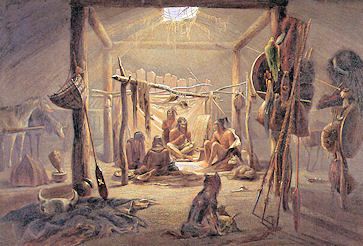

Hidatsa Winter Buffalo Hunt on Snowshoes

George Catlin, 1830s

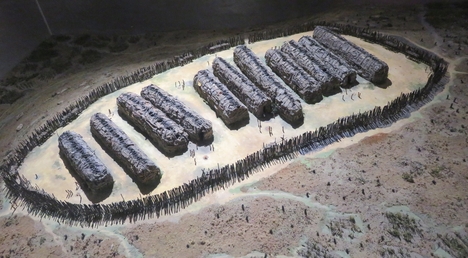

The Iroquoian peoples north of Lake Erie, in the area that today is Ontario and Quebec, built substantial longhouses clustered inside defensive double palisades. These villages were usually occupied for several years, but they always eventually had to be abandoned as resources, both fields and prey, gradually became depleted. The threat posed by whole small villages moving over the landscape in search of better resources is part of why neighboring villages needed palisades.

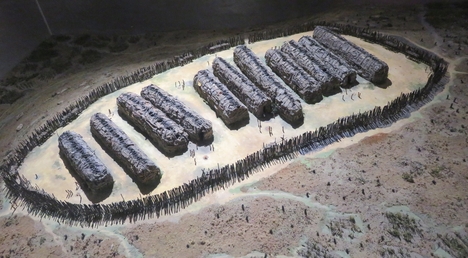

Iroquoian Longhouse Villages

Above: Museum Model of Palisaded Longhouse Village

(Museum of Ontario Archaeology, London, Ontario)

Right: Inside the double palisade of a reconstructed Iroquoian village

(Centre d'Interprétation du Site Archéologique, Droulers, Québec)


F. Intermediate Adaptations: Natufians & Other Terminal Foragers

Archaeologically, in most areas where we can trace the dawn of agriculture, we do so among people who were terminal foragers: foragers who knew about gardening and raised a few crops but did not depend on them for most of their diet. In the Near East, for example, many sites are associated with the so-called Natufians, a name given to various groups in the Levant practicing a terminal foraging adaptation in which wild grains were harvested, processed with grinding stones, and consumed as beer, and eventually perhaps as porridge or bread. Clearly the Natufians were on the edge of agriculture.

One example of a site with such a “terminal foraging” adaptation is Abu Hureyra in Syria, occupied by foragers from about 9500 BC to 8100 BC, well over a thousand years. It was home to as many as 300 people at a time, at least some of them year-round, who included wild grains as a routine part of their diet. People ceased to live there about 8100 BC, but five hundred years later the same site was reoccupied by other people, no longer called Natufians, for about 2600 years, from 7600 to about 5000 BC. Plant remains from this second occupation were mostly domesticated, and careful dating of animal bones makes it possible to trace the inhabitants' meat supply from entirely hunted meat at the beginning of this occupation through the incipient domestication of sheep and goats at the end.

Natufians, being terminal foragers, are not normally called Neolithic, but the people who followed them are. However, the earliest Neolithic societies of the Near East still lacked pottery, which later became a hallmark of Neolithic life in the same area. The site of Jericho, for example, whose lowest layers look very much like Jarmo, contains levels identified as Pre-Pottery Neolithic, divided into subtypes A (PPN-A) and B (PPN-B). At ’Ain Ghazal, in Jordan, there is even a Pre-Pottery Neolithic C (PPN-C) level, suggesting a quite long “semi-Neolithic” occupation during which inhabitants depended largely upon cultivated crops but had no pottery, and so still lacked what was to become the “full Neolithic way of life” in that region.

All terminal foragers —and all Neolithic peoples for that matter— continued to engage in hunting, fishing, and the collection of wild plants. Probably many —perhaps most— Neolithic communities could not have survived in some years without ocean, lake, or river resources as a supplement to what they were able to raise. [Note 7]

To summarize, the “Neolithic Revolution,” despite its name, was a gradual process. Neolithic people were quite unaware that they were being Neolithic. And the thousands of small innovations that in retrospect we can see as part of the transition were probably all considered trivial when they occurred. The latest foraging society was just as complex as the earliest gardening society, and it is not entirely accurate to say that agriculture “caused” a Neolithic mentality any more than that a late-foraging mentality “caused” agriculture!


3. The Spread of Farming & the “Wave of Advance”

The transformation may not have been quite so gradual in areas to which agriculture spread once it had appeared. Europe, for example, did not develop agriculture de novo but “received” it from the Near East, and there the process went faster. One model of how this happened, associated with the work of L. Luca Cavalli-Sforza at Stanford University, suggests that growing Near Eastern populations, needing more and more land, slowly expanded outward, especially into Europe, gradually occupying more of that continent and driving out or assimilating the thin population of foragers already living there. This wave-of-advance model, based on the distribution and dates of the earliest agricultural sites in Europe, envisions the leading edge of the agricultural way of life moving northwestward, like the front of a lava flow or a glacier, at about one kilometer per year, and reaching northwestern Europe about 4000 BC. (A variant of the same model sees “population pulses” moving across Europe in fits and starts rather than evenly. That fits the actual dates of sites a bit better, but requires an explanation for the irregularity of the movement.)
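The wave-of-advance idea is usually formalized with R. A. Fisher's reaction-diffusion model, whose classic result is that a population growing at rate r while dispersing with diffusion coefficient D pushes its frontier forward at a speed of about 2√(rD). The sketch below simply evaluates that formula. The growth rate (2.5% a year) and dispersal value (10 km² a year) are hypothetical, picked for illustration and not taken from Cavalli-Sforza's work or any later study.

```python
import math

# Fisher's travelling-wave result: a population growing at rate r (per year)
# and spreading by short random moves with diffusion coefficient D (km^2 per year)
# pushes its frontier forward at a minimum speed of v = 2 * sqrt(r * D) km per year.


def front_speed(r_per_year, d_km2_per_year):
    """Asymptotic speed of the advancing front, in km per year."""
    if r_per_year <= 0 or d_km2_per_year <= 0:
        raise ValueError("growth rate and diffusion coefficient must be positive")
    return 2 * math.sqrt(r_per_year * d_km2_per_year)


def years_to_advance(distance_km, speed_km_per_year):
    """Time for a front moving at constant speed to cover a distance."""
    return distance_km / speed_km_per_year


if __name__ == "__main__":
    # Hypothetical inputs, not estimates from any study.
    v = front_speed(r_per_year=0.025, d_km2_per_year=10.0)
    print(f"front speed: {v:.2f} km per year")
    print(f"years to advance 1,000 km: {years_to_advance(1000, v):.0f}")
```

With these illustrative inputs the front moves at about one kilometer per year. Because the speed depends on the square root of growth rate times dispersal, quadrupling the growth rate only doubles the speed, so the result is fairly insensitive to the exact demographic figures chosen.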

In the “wave-of-advance” model, the transition to farming in any local area is often relatively abrupt: the change from hunting in the forest to cutting down the forest to plant fields could be complete in a given area in as little as five or six generations. Foragers would have assimilated, converted to farming, been killed, or starved. That, of course, makes the “Neolithic Revolution” revolutionary not just in impact but also in being very fast in each area it swept over. (Even so, it would still have seemed fairly unremarkable to the participants, since five or six generations, about 150 years, is a long time.)

People or Ideas? A second, more recent model modifies the original “wave-of-advance” view and proposes the movement not mainly of people but of ideas. (The ideas would still have moved, like the migrants in the first model, at about one kilometer per year.) In this view, European foragers gradually adopted the understandings and techniques of agriculture as a kind of insurance for their food supply. Population densities then rose as a result, eventually turning formerly nomadic foragers into settled farmers completely dependent upon agriculture.

Neither view entirely excludes the other, of course, and studies since 2000 offer evidence in both directions. The database of agricultural village sites supporting the original wave-of-advance model has been vastly expanded, from Cavalli-Sforza’s initial 53 sites to over 700. It continues to support the general rule of thumb of a northwestward “flow” of about one kilometer per year, and demographers project that natural population increase could have sustained that rate. Some researchers argue that if only ideas had been moving, agriculture should have spread much faster, and they conclude that the process must have involved population movement.

On the other hand, a 2005 mitochondrial DNA study of twenty-four skeletons from European farming villages dating to about 5500 BC found less similarity with modern Europeans than would be expected if modern Europeans were descended from them. That suggests an early population took up agriculture and was then displaced by the ancestors of modern Europeans, which is puzzling. Some have speculated that immigrating male farmers might have tended to marry foraging women, leaving us to wonder what became of the foraging men (Bower 2005). [Source]

Obviously, understanding the expansion of farming into Europe will require more information and, in the end, probably a far more complex model. The origin of agriculture in other regions is just as challenging. The “bottom line,” however, is that the spread of agriculture was a different and probably faster process than the “invention” of agriculture.

Twenty-two interactive, multiple-choice review questions are available for this reading. They are organized into three "wimp" quizzes, two "normal" quizzes, or one "hero" quiz.
